Artificial Neural Networks: Structure, Function, and Types

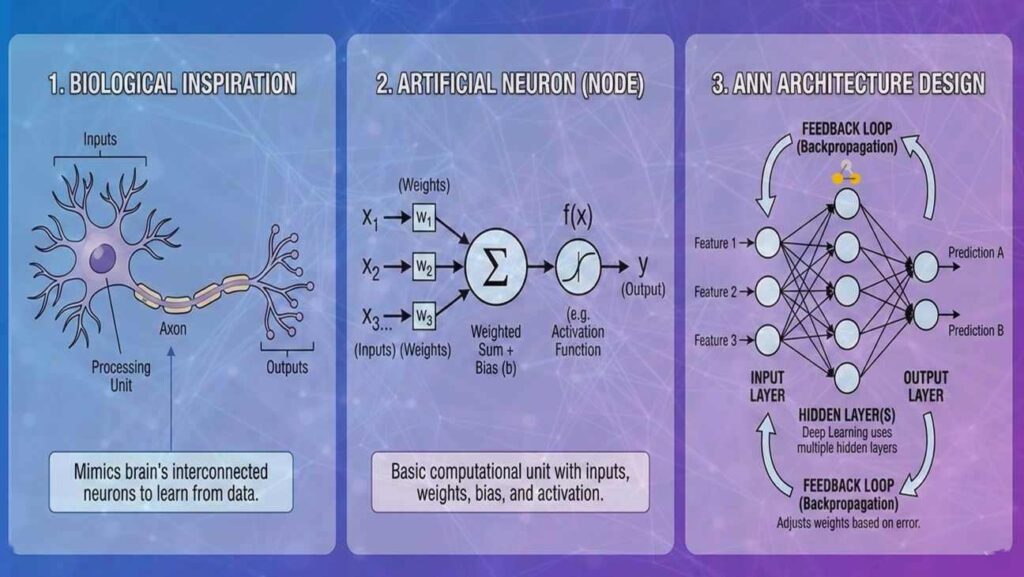

In machine learning, a neural network (NN), also known as an artificial neural network (ANN), is a computational model inspired by biological neural networks, consisting of interconnected units called artificial neurons.

Artificial neural networks (ANNs) are one of the greatest advances in the history of computer science. By moving from the rigid, instruction-based logic of traditional programming to systems inspired by the biological architecture of neurons in the human brain, ANNs have enabled machines to perform tasks such as recognizing faces, translating languages, and even creating art. This article traces the development of artificial neural networks, their mathematical mechanics, and their control flow: how a network combines multiple inputs (x1, x2, and x3) into a simple, straightforward output (0 or 1). It also surveys the main architectures and the ethical questions they raise.

ANN Virtual Model

1. Historical Evolution

The Foundation (1943)

Neurophysiologist Warren McCulloch and mathematician Walter Pitts created the first computational model of a neural network, described in terms of simple electrical circuits, showing that simplified neurons can be modeled mathematically and can compute logical operations like logic gates.
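The idea can be sketched as a threshold unit that "fires" (outputs 1) when the weighted sum of its binary inputs reaches a threshold. This is a simplified modern rendering, not the notation of the 1943 paper: the function names and the use of a negative weight for inhibition are assumptions made for illustration. Choosing the weights and threshold turns the same unit into an AND, OR, or NOT gate.

```python
def mp_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

# Logic gates obtained purely by choosing weights and a threshold (hypothetical helpers).
def and_gate(a, b):
    return mp_neuron([a, b], [1, 1], threshold=2)  # both inputs must be on

def or_gate(a, b):
    return mp_neuron([a, b], [1, 1], threshold=1)  # one input on is enough

def not_gate(a):
    return mp_neuron([a], [-1], threshold=0)  # negative (inhibitory) weight inverts the input

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```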

The Perceptron (1958)

Frank Rosenblatt created the “perceptron,” the first successful implementation of an ANN. It was a single-layer network designed for image recognition, but it could only solve linearly separable problems.
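The perceptron's learning rule nudges the weights toward each example it gets wrong. The sketch below is a simplified version under stated assumptions (step output, learning rate of 1, weights starting at zero; the names `predict` and `train_perceptron` are hypothetical). On a linearly separable problem such as AND, it stops as soon as every example is classified correctly.

```python
def predict(weights, bias, x):
    """Step activation: 1 if the weighted sum plus bias is positive, else 0."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0

def train_perceptron(data, epochs=20, lr=1.0):
    """Perceptron learning rule: adjust weights only when an example is misclassified."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # a full pass with no mistakes: training has converged
            break
    return weights, bias

and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_data)
print([predict(w, b, x) for x, _ in and_data])  # [0, 0, 0, 1]
```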

The First AI Winter (1969)

Minsky and Papert proved that single-layer networks cannot solve the logical XOR (exclusive OR) problem, which led to a sharp decline in research funding.
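The core of the XOR argument fits in a few lines. Assume a single threshold unit that outputs 1 when \( w_1 x_1 + w_2 x_2 + b > 0 \) and 0 otherwise (a standard perceptron convention, assumed here). The four rows of the XOR truth table would require:

\[
\begin{aligned}
(0,0) \mapsto 0 &: \quad b \le 0 \\
(0,1) \mapsto 1 &: \quad w_2 + b > 0 \\
(1,0) \mapsto 1 &: \quad w_1 + b > 0 \\
(1,1) \mapsto 0 &: \quad w_1 + w_2 + b \le 0
\end{aligned}
\]

Adding the second and third inequalities gives \( w_1 + w_2 + 2b > 0 \), so \( w_1 + w_2 + b > -b \ge 0 \), which contradicts the fourth. No choice of weights and bias satisfies all four rows, so a single threshold unit cannot compute XOR.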

The Resurgence (1980s)

Rumelhart, Hinton, and Williams (1986) popularized backpropagation, an algorithm for training multi-layer networks. Networks with hidden layers can solve nonlinear problems such as XOR, and this revived interest in the field.
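A network with one hidden layer can represent XOR, which no single-layer network can. In the hand-wired sketch below, the weights are picked by hand rather than learned, and the decomposition XOR = AND(OR, NAND) is one standard construction assumed for illustration: two hidden threshold units compute OR and NAND, and the output unit combines them with AND.

```python
def step(z):
    """Threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0

def xor_network(x1, x2):
    # Hidden layer: two threshold units with hand-picked weights and biases.
    h_or = step(1 * x1 + 1 * x2 - 0.5)     # OR: fires if at least one input is 1
    h_nand = step(-1 * x1 - 1 * x2 + 1.5)  # NAND: fires unless both inputs are 1
    # Output layer: AND of the two hidden units.
    return step(1 * h_or + 1 * h_nand - 1.5)

print([xor_network(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```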

The Deep Learning Era (2012–Present)

Big data and powerful GPUs, such as NVIDIA’s, made deep learning practical. AlexNet’s win in the 2012 ImageNet competition sparked the current revolution, which led to the widespread use of large language models (LLMs) and AI assistants such as ChatGPT, Gemini, and others.

2. Mathematical Foundations

Weights, Summation & Bias

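Every neuron multiplies each input signal (x) by a weight (w), sums the results, and adds a bias (b) to shift the activation: \( z = w_1 x_1 + w_2 x_2 + w_3 x_3 + b \) for three inputs, or \( z = \sum_i w_i x_i + b \) in general. An activation function \( f(z) \) then turns this sum into the neuron’s output.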
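For a three-input neuron, the computation takes a few lines. The input, weight, and bias values below are made up purely for illustration, and a step activation stands in for the final 0/1 decision.

```python
def neuron_output(x, w, b):
    """Weighted sum of inputs plus bias, followed by a 0/1 step activation."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return z, (1 if z > 0 else 0)

# Hypothetical values chosen only for illustration.
x = [1.0, 0.0, 1.0]   # inputs x1, x2, x3
w = [0.6, -0.4, 0.3]  # one weight per input
b = -0.5              # bias shifts the point at which the neuron fires

z, y = neuron_output(x, w, b)
print(z, y)  # z is about 0.4, so the neuron fires (y = 1)
```

Changing the bias to `-1.0` lowers z to about -0.1 with the same inputs and weights, so the neuron no longer fires. This is what "shifting the activation" means in practice.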

Activation Functions

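- ReLU \( f(z) = \max(0, z) \) – The industry standard, chosen because it is cheap to compute.

- Sigmoid \( f(z) = \frac{1}{1 + e^{-z}} \) – Squashes any input into the range (0, 1), which makes it a natural fit for probabilities.

- Tanh \( f(z) = \tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}} \) – Maps values to the range (-1, 1).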
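The three activation functions above can be written directly from their formulas. This is a plain-Python sketch for scalar inputs; deep-learning libraries provide vectorized, numerically hardened versions.

```python
import math

def relu(z):
    """ReLU: passes positive values through and clips negatives to 0."""
    return max(0.0, z)

def sigmoid(z):
    """Sigmoid: squashes any real number into (0, 1).

    Note: math.exp(-z) overflows for very negative z; production code uses a
    numerically stable formulation.
    """
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Tanh: squashes any real number into (-1, 1)."""
    return math.tanh(z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  relu={relu(z):.3f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}")
```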

Algorithms & Optimization

The “loss landscape” represents the network’s error for every possible setting of its weights. Training is the process of navigating this landscape downhill to find the lowest point in the valley (the minimum error).

- Learning Rate: The size of the steps taken down the hill.

- Stochasticity: Adding randomness to avoid getting stuck in “puddles” (local minima).
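Walking downhill can be sketched on a toy, one-parameter loss landscape, \( \text{loss}(w) = (w - 3)^2 \), whose lowest point is at \( w = 3 \). The loss, starting point, and learning rates are assumptions chosen to make the effect of step size visible. The toy uses the exact gradient; stochastic gradient descent would instead estimate each step from a random subset of the data.

```python
def gradient_descent(grad, w0, learning_rate, steps):
    """Repeatedly step against the gradient (downhill), starting from w0."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w

def loss(w):
    """Toy loss landscape: a valley with its lowest point at w = 3."""
    return (w - 3.0) ** 2

def loss_grad(w):
    """Slope of the toy loss at w (its derivative)."""
    return 2.0 * (w - 3.0)

w_small = gradient_descent(loss_grad, w0=0.0, learning_rate=0.1, steps=100)
w_large = gradient_descent(loss_grad, w0=0.0, learning_rate=1.1, steps=100)
print(w_small, loss(w_small))  # w very close to 3, loss very close to 0
print(w_large, loss(w_large))  # each step overshoots the valley by more than the last
```

With a learning rate of 0.1, the distance to the minimum shrinks by a factor of 0.8 each step; with 1.1, it grows by a factor of 1.2, so training diverges.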

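The Learning Loop (Backpropagation): the network makes a prediction, measures its error with a loss function (e.g., Mean Squared Error), propagates that error backward through the layers to work out how much each weight and bias contributed, and then adjusts the weights and biases to reduce the error.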
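The loop can be sketched for the smallest possible network: a single sigmoid neuron with two inputs learning the OR function. With only one neuron, the backward pass is a single application of the chain rule; a multi-layer network applies the same rule layer by layer, from the output back toward the input. The dataset, learning rate, epoch count, and the choice of squared error as the loss are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset: the logical OR function as (inputs, target) pairs.
data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

w = [0.0, 0.0]  # weights, one per input
b = 0.0         # bias
learning_rate = 1.0
loss_history = []

for epoch in range(5000):
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    total_loss = 0.0
    for (x1, x2), y in data:
        # 1. Forward pass: weighted sum plus bias, then sigmoid activation.
        z = w[0] * x1 + w[1] * x2 + b
        a = sigmoid(z)
        # 2. Loss: squared error for this example.
        total_loss += (a - y) ** 2
        # 3. Backward pass (chain rule): dL/dz = dL/da * da/dz.
        dL_da = 2.0 * (a - y)
        da_dz = a * (1.0 - a)
        dL_dz = dL_da * da_dz
        grad_w[0] += dL_dz * x1  # dz/dw1 = x1
        grad_w[1] += dL_dz * x2  # dz/dw2 = x2
        grad_b += dL_dz          # dz/db = 1
    loss_history.append(total_loss / len(data))  # mean squared error this epoch
    # 4. Update: step each parameter against its average gradient.
    w = [wi - learning_rate * gi / len(data) for wi, gi in zip(w, grad_w)]
    b -= learning_rate * grad_b / len(data)

predictions = [1 if sigmoid(w[0] * x1 + w[1] * x2 + b) > 0.5 else 0 for (x1, x2), _ in data]
print(f"MSE: {loss_history[0]:.3f} -> {loss_history[-1]:.4f}")
print(predictions)
```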

Fine-Tuning the Machine

Overfitting

When a network “memorizes” its training data instead of learning the underlying patterns, it performs well on the training data but fails on new, unseen data.
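The effect does not need a neural network to appear. The sketch below swaps in polynomial curve fitting (a substitution made only because it fits in a few lines): a flexible degree-7 polynomial can pass through all 8 noisy training points, while a straight line can only capture the trend. The data, noise level, and degrees are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: the true pattern is the line y = 2x, observed with random noise.
x_train = np.linspace(0.0, 1.0, 8)
y_train = 2.0 * x_train + rng.normal(0.0, 0.2, size=x_train.size)
x_test = np.linspace(0.03, 0.97, 50)  # new inputs the models never saw
y_test = 2.0 * x_test + rng.normal(0.0, 0.2, size=x_test.size)

def mse(coeffs, x, y):
    """Mean squared error of the polynomial with coefficients `coeffs` on (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

line_fit = np.polyfit(x_train, y_train, deg=1)    # just enough capacity for the trend
wiggly_fit = np.polyfit(x_train, y_train, deg=7)  # enough capacity to hit all 8 points

for name, coeffs in (("degree 1", line_fit), ("degree 7", wiggly_fit)):
    train_err = mse(coeffs, x_train, y_train)
    test_err = mse(coeffs, x_test, y_test)
    print(f"{name}: train MSE = {train_err:.4f}, test MSE = {test_err:.4f}")
```

The degree-7 fit's training error is essentially zero because it has memorized the noise along with the trend; its error on the new points is typically larger than the straight line's.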

Dropout

A regularization technique in which neurons are randomly “turned off” during training, so they pass no information forward on that step. This prevents the network from over-relying on specific connections.
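Dropout can be sketched in a few lines. This version uses "inverted dropout", a common variant assumed here because the text does not name one: it rescales the surviving activations during training so that nothing needs to change at inference time. `p` is the probability of dropping each unit, and the activation values are hypothetical.

```python
import random

def dropout(activations, p, training=True, rng=None):
    """Inverted dropout with drop probability p.

    During training, each activation is zeroed with probability p and the survivors
    are scaled by 1 / (1 - p), so each unit's expected value is unchanged.
    At inference time (training=False), activations pass through untouched.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

hidden = [0.5, 1.2, 0.8, 2.0, 0.3, 1.5]  # hypothetical hidden-layer outputs
rng = random.Random(42)
print(dropout(hidden, p=0.5, rng=rng))         # roughly half are 0.0, the rest doubled
print(dropout(hidden, p=0.5, training=False))  # unchanged at inference time
```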

Batch Size

The number of training examples the network processes before it updates its internal parameters (weights and biases).
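Splitting a dataset into batches can be sketched as a generator. The dataset size and batch size here are hypothetical, and real training loops usually shuffle the data at the start of each epoch, which this sketch omits.

```python
import math

def iterate_minibatches(examples, batch_size):
    """Yield consecutive slices of `examples`, each holding at most `batch_size` items."""
    for start in range(0, len(examples), batch_size):
        yield examples[start:start + batch_size]

dataset = list(range(10))  # ten hypothetical training examples
batch_size = 4

for batch in iterate_minibatches(dataset, batch_size):
    # In real training, one forward pass, backward pass, and parameter update happen here.
    print(batch)

updates_per_epoch = math.ceil(len(dataset) / batch_size)
print("parameter updates per epoch:", updates_per_epoch)  # 3
```

A smaller batch size means more (noisier) updates per pass over the data; a larger one means fewer, smoother updates.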

3. Network Architectures

The Hierarchy of Features

Artificial neural networks learn a hierarchy of abstractions: in an image model, for example, the lower layers detect simple edges, while the upper layers recognize complex objects.

Feedforward (FNN)

Information travels in one direction, from input to output. Best for basic classification tasks, such as those based on word counts.
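A feedforward pass can be sketched as two matrix multiplications. Below, a hypothetical email is represented by the counts of three words, and made-up weights (not learned ones) turn those counts into a spam probability. The vocabulary, layer sizes, and weights are all illustrative assumptions.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def feedforward(x, W1, b1, W2, b2):
    """One pass from input to output; nothing flows backward or loops."""
    hidden = relu(W1 @ x + b1)        # input layer -> hidden layer (2 units)
    return sigmoid(W2 @ hidden + b2)  # hidden layer -> single output score

# Hypothetical input: counts of the words "free", "winner", "meeting" in an email.
x = np.array([3.0, 1.0, 0.0])

# Hypothetical weights; a trained network would learn these from labeled emails.
W1 = np.array([[0.8, 0.9, -0.5],
               [-0.2, 0.1, 0.7]])
b1 = np.array([0.0, 0.1])
W2 = np.array([1.5, -1.0])
b2 = -1.0

score = feedforward(x, W1, b1, W2, b2)
print(f"spam probability: {score:.3f}")
```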

Use case: Spam Filtering

Convolutional (CNN)

Uses filters to scan for spatial patterns in grids of pixels.
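The filter scan can be sketched as a sliding sum of elementwise products. The 3x3 kernel below is a hand-written vertical-edge detector chosen for illustration; in a real CNN, the kernel values are learned during training. Large outputs mark where the pattern appears.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum elementwise products.

    Like most deep-learning libraries, this computes cross-correlation
    (the kernel is not flipped), which CNNs conventionally call convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A tiny 4x5 grayscale "image": dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hypothetical filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in conv2d(image, vertical_edge):
    print(row)  # [3, 3, 0]: strong response where the window spans the edge
```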

Use case: Facial Recognition

Recurrent (RNN/LSTM)

Features loops for “memory,” crucial for sequential time-series data.
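The loop can be sketched with a plain one-unit recurrent cell (an Elman-style RNN, not an LSTM; LSTMs add gates to this same loop). The hidden state `h` is carried from one step to the next, so an input seen early in a sequence still influences later outputs. The parameter values are assumptions chosen for illustration.

```python
import math

def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Run a one-unit recurrent cell over a sequence and return every hidden state.

    Each step feeds the previous hidden state (the "memory") back in:
        h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = h0
    states = []
    for x_t in sequence:
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states

# Hypothetical parameters, chosen only for illustration.
w_x, w_h, b = 1.0, 0.8, 0.0

# Two sequences that end identically but start differently.
states_a = rnn_forward([1.0, 0.0, 0.0], w_x, w_h, b)
states_b = rnn_forward([0.0, 0.0, 0.0], w_x, w_h, b)
print(states_a[-1], states_b[-1])  # the early 1.0 still shows up in the final state
```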

Use case: Speech & Stocks

Transformers

Uses Attention Mechanisms to weigh word importance simultaneously.
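The attention step can be sketched as scaled dot-product attention, the form used in the original Transformer design: \( \mathrm{softmax}(QK^\top / \sqrt{d_k})\,V \). Every word's query is compared with every word's key in one matrix product, which is what lets all words be weighed simultaneously. The sizes and the random stand-in matrices below are assumptions; a real model learns the projections and stacks many such layers, each with multiple attention heads.

```python
import numpy as np

def softmax(scores):
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    e = np.exp(scores - scores.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Score every query against every key at once, then mix the values by those weights."""
    d_k = K.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k))  # (n_queries, n_keys); each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 4, 8                  # hypothetical: 4 words, 8-dimensional vectors
X = rng.normal(size=(n_tokens, d_model))  # stand-in word embeddings

# Random matrices stand in for the learned query/key/value projections.
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
output, weights = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)

print(np.round(weights, 2))  # row i: how strongly word i attends to each word
print(output.shape)          # (4, 8): one updated vector per word
```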

Use case: ChatGPT & Gemini

The Black Box

As ANNs grow to 100 billion parameters and beyond, it becomes harder to interpret how they reach their outputs. This lack of transparency leads to the “Black Box Problem,” creating a need for Explainable AI (XAI).

Ethical Considerations

- Bias: Algorithms learn human biases.

- Privacy: Data scraping concerns.

- Energy: High carbon footprint.

- Jobs: Automation shift.