Computer Generation Fundamentals

The Evolution of Computing: From Vacuum Tubes to Quantum Technology and Artificial Intelligence (AI)

Introduction

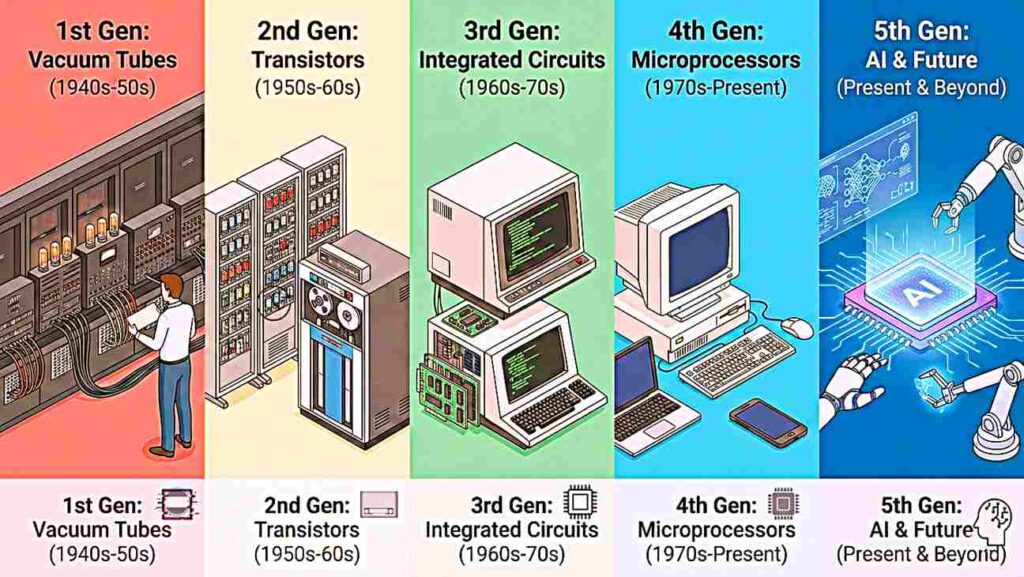

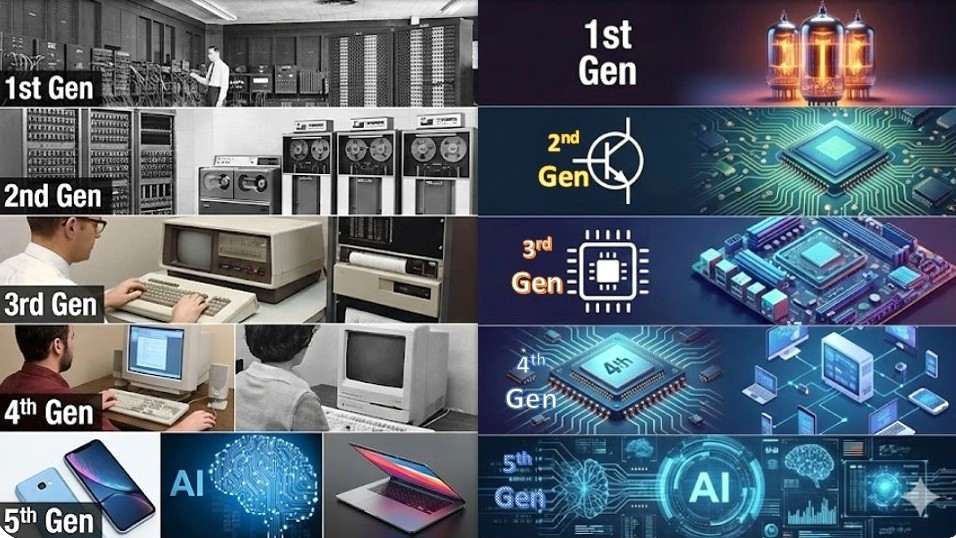

The history of computing is usually described in terms of generations of computing devices. Each generation is defined by a major technological development that fundamentally changed how computers operate, producing devices that were smaller, cheaper, more powerful, more efficient, and more reliable than those before them.

Quick Comparison of Computer Generations

| Generation | Period | Key Technology | Main Language |
|---|---|---|---|
| 1st Generation | 1940 – 1956 | Vacuum Tubes | Machine Language |
| 2nd Generation | 1956 – 1963 | Transistors | Assembly Language |
| 3rd Generation | 1964 – 1971 | Integrated Circuits | High-Level (FORTRAN/Pascal) |
| 4th Generation | 1971 – Present | Microprocessors (VLSI) | Python, C++, Java, etc. |
| 5th Generation | Present – Future | AI & Quantum Tech | Natural Language |

First Generation (1940 – 1956): Vacuum Tubes

The first computers used vacuum tubes for circuitry and magnetic drums for memory. They were often enormous, taking up entire rooms.

Key Characteristics

- Used Vacuum Tube technology.

- Supported Machine Language only.

- Very costly and huge in size.

- Generated a lot of heat.

- Input was based on punched cards.

Examples

ENIAC, EDVAC, UNIVAC I, IBM-701, IBM-650.

Extended Technical Core & Architecture

Architecture Detail: ENIAC represented numbers in decimal rather than binary and could perform about 5,000 additions per second. It was programmed by hand through plugboards and switches, so setting up a new problem was slow and laborious. Its raw speed was extremely low by modern standards, yet roughly 1,000 times faster than the electro-mechanical machines of its day.
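To make the decimal representation and the quoted speed concrete, here is a minimal Python sketch. It is an illustration only: ENIAC did this in hardware, and the function names, the low-digit-first layout, and the 10-digit width used in the example are assumptions chosen for clarity, not a model of the real circuitry.

```python
# Illustrative sketch only: ENIAC added numbers in hardware, not software.
# All names below (decimal_add, to_digits, from_digits) are hypothetical.

ADDITIONS_PER_SECOND = 5_000  # figure quoted in the text


def decimal_add(a_digits, b_digits):
    """Add two equal-length decimal numbers stored low digit first.

    Each position holds one digit 0-9, the way a decimal machine keeps one
    digit per position instead of encoding the whole value in binary.
    """
    result = []
    carry = 0
    for a, b in zip(a_digits, b_digits):
        total = a + b + carry
        result.append(total % 10)  # digit that stays in this position
        carry = total // 10        # carry passed to the next position
    return result, carry


def to_digits(value, width):
    """Split a non-negative integer into `width` decimal digits, low digit first."""
    return [(value // 10 ** i) % 10 for i in range(width)]


def from_digits(digits):
    """Rebuild an integer from decimal digits stored low digit first."""
    return sum(d * 10 ** i for i, d in enumerate(digits))


digits, overflow = decimal_add(to_digits(4789, 10), to_digits(5321, 10))
total = from_digits(digits)

# Time budget per addition implied by the quoted rate.
microseconds_per_addition = 1_000_000 / ADDITIONS_PER_SECOND
```

At 5,000 additions per second, each addition takes about 200 microseconds. A modern processor completes many additions in a single nanosecond-scale clock cycle.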

Second Generation (1956 – 1963): Transistors

The transistor was far superior to the vacuum tube, allowing computers to become smaller, faster, cheaper, and more reliable than their predecessors.

Key Characteristics

- Use of transistors (Solid State).

- Primary memory: Magnetic cores.

- Secondary memory: Magnetic tapes/disks.

- Assembly language used.

- Reduced heat and power consumption.

Examples

IBM 1620, IBM 7094, CDC 1604, CDC 3600, UNIVAC 1108.

Extended Technical Core & Architecture

Architecture Detail: Second-generation machines kept the stored-program architecture already in use in first-generation machines such as EDVAC, and built their hardware registers from transistors. The CDC 1604, designed by Seymour Cray, featured a 48-bit word length and used approximately 25,000 transistors. Machines of this class still needed dedicated cooling to keep their solid-state components stable during long processing tasks.
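A short sketch of what a 48-bit word length implies for the range of values one word can hold. It treats the word as a plain unsigned integer, which is a simplifying assumption rather than a description of the CDC 1604's actual number format. The helper name `wrap_to_word` is hypothetical.

```python
WORD_BITS = 48  # word length quoted in the text

# Number of distinct bit patterns a single word can hold.
distinct_patterns = 2 ** WORD_BITS

# Largest value if the word is read as an unsigned integer (an assumption).
max_unsigned = distinct_patterns - 1


def wrap_to_word(value, bits=WORD_BITS):
    """Keep only the low `bits` bits, as a fixed-width register would."""
    return value & ((1 << bits) - 1)


# Adding 1 to the largest value wraps around to zero in a fixed-width word.
wrapped = wrap_to_word(max_unsigned + 1)
```

A 48-bit word has 281,474,976,710,656 distinct patterns, compared with 4,294,967,296 for a 32-bit word.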

Third Generation (1964 – 1971): Integrated Circuits

The development of the integrated circuit (IC) was the hallmark of this generation. Transistors were miniaturized and placed on silicon chips (semiconductors).

Key Characteristics

- Integrated Circuits (IC) used.

- Remote processing & Time-sharing.

- High-level programming languages.

- Smaller and more reliable.

- Keyboard and Monitor interface.

Examples

IBM System/360 series, Honeywell 6000 series, PDP (Programmed Data Processor) series.

Extended Technical Core & Architecture

Architecture Detail: The IBM System/360 used SLT (Solid Logic Technology), which mounted discrete components on a ceramic substrate and served as a bridge to fully monolithic ICs. It standardized byte-addressable memory with 8-bit bytes and provided sixteen 32-bit general-purpose registers. Because every model in the family implemented the same instruction set architecture, the same software could run across different machine models, an early and influential example of compatibility across a product line.
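The relationship between byte-addressable memory and a 32-bit register can be sketched in a few lines of Python. This is a toy model, not System/360 code: the 16-byte memory size and the helper names `store_word` and `load_word` are assumptions for illustration. Big-endian byte order is used here, meaning the most significant byte sits at the lowest address.

```python
# Toy model: every address names one 8-bit byte; a 32-bit register value
# occupies four consecutive addresses.

memory = bytearray(16)  # 16 individually addressable bytes (size is arbitrary)


def store_word(mem, address, value):
    """Write a 32-bit value into four consecutive bytes, high byte first."""
    mem[address:address + 4] = (value & 0xFFFFFFFF).to_bytes(4, "big")


def load_word(mem, address):
    """Read four consecutive bytes back into one 32-bit value."""
    return int.from_bytes(mem[address:address + 4], "big")


store_word(memory, 4, 0x12345678)

high_byte = memory[4]          # single byte at address 4
low_byte = memory[7]           # single byte at address 7
word = load_word(memory, 4)    # the full 32-bit value again
```

Because each byte has its own address, a program can work with a single character at address 7 as easily as with the full 32-bit word starting at address 4.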

Fourth Generation (1971 – Present): Microprocessors

Thousands of transistors and other circuit components were integrated onto a single silicon chip to form the microprocessor. What filled a whole room in the first generation could now fit in the palm of the hand.

Key Characteristics

- VLSI (Very Large Scale Integration).

- The concept of Personal Computers (PC).

- Development of the Internet and GUI.

- Distributed systems and Networks.

- C, C++, and database development.

Examples

DEC 10, STAR 1000, Apple II, CRAY-1 (Super Computer).

Extended Technical Core & Architecture

Architecture Detail: The Intel 4004 (1971) was the first commercially available CPU on a single chip. Modern fourth-generation processors use pipelining to overlap the execution of successive instructions, and superscalar execution to complete more than one instruction per clock cycle. Power-efficient RISC (Reduced Instruction Set Computer) designs, as opposed to CISC (Complex Instruction Set Computer) designs, became the dominant choice for mobile computing.
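The benefit of pipelining and superscalar execution can be estimated with a simple cycle count. The sketch below is an idealized model that assumes no stalls, hazards, or cache misses, so real processors will do worse. The function names, the 5-stage depth, and the 100-instruction workload are illustrative assumptions.

```python
import math


def unpipelined_cycles(instructions, stages):
    """Each instruction runs all stages before the next one starts."""
    return instructions * stages


def pipeline_cycles(instructions, stages, issue_width=1):
    """Ideal pipeline: fill the pipe once, then finish `issue_width`
    instructions per cycle (issue_width > 1 models superscalar issue)."""
    if instructions == 0:
        return 0
    return stages + math.ceil(instructions / issue_width) - 1


N = 100     # instructions in the hypothetical workload
STAGES = 5  # hypothetical pipeline depth

sequential = unpipelined_cycles(N, STAGES)          # no overlap at all
scalar = pipeline_cycles(N, STAGES)                 # one instruction per cycle
two_wide = pipeline_cycles(N, STAGES, issue_width=2)  # two per cycle
```

Under these assumptions the workload takes 500 cycles without overlap, 104 cycles on a scalar pipeline, and 54 cycles on a two-wide superscalar pipeline.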

Fifth Generation (Present – Future): Artificial Intelligence

Based on Artificial Intelligence (AI) and Ultra Large Scale Integration (ULSI), this generation aims to create devices capable of self-learning.

Future Horizons

- AI and Machine Learning.

- Parallel Processing.

- Quantum Computing.

- Natural Language Processing.

- Nanotechnology.

Modern Tech

Desktop, Laptop, Ultrabook, Chromebook, GPT-4, Quantum Processors.

Extended Technical Core & Architecture

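Architecture Detail: For massively parallel AI workloads, architecture has shifted from CPU-centric designs toward GPUs and NPUs. Quantum computers use superposition and entanglement to attack certain problems, such as integer factoring and the simulation of quantum systems, that are impractical for classical machines. AI systems rely on neural engines and accelerators tuned for transformer models, and the largest can perform quadrillions of arithmetic operations per second, most of them inside matrix multiplications.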
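Superposition and entanglement can be illustrated with a tiny classical simulation of two qubits. This is a sketch only: it tracks four amplitudes in an ordinary Python list, which is exactly what a real quantum processor does not have to do. The function names and the amplitude ordering [00, 01, 10, 11] are assumptions made for this example.

```python
import math


def apply_hadamard_first(state):
    """Hadamard gate on the first qubit of a two-qubit state.

    `state` lists the amplitudes of |00>, |01>, |10>, |11> in that order.
    """
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11),
            s * (a00 - a10), s * (a01 - a11)]


def apply_cnot(state):
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


state = [1.0, 0.0, 0.0, 0.0]         # start in |00>
state = apply_hadamard_first(state)  # superposition of |00> and |10>
state = apply_cnot(state)            # entangled state of |00> and |11>

# Probability of measuring each of the four outcomes.
probabilities = [abs(amplitude) ** 2 for amplitude in state]
```

The final state gives 00 or 11 with equal probability and never 01 or 10, so measuring one qubit fixes the outcome of the other. Simulating n qubits this way needs 2^n amplitudes, which is one reason classical simulation becomes impractical as n grows.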

Summary and Conclusion

Looking across these generations reveals a clear pattern: computing has moved steadily toward tighter integration and more capable automated reasoning. The path runs from vacuum-tube machines that could perform only simple calculations to today's AI systems, which can generate original content in milliseconds, and to early quantum processors. We are entering an era in which the line between hardware and thought is often said to be blurring. Understanding these fundamentals is essential for anyone aspiring to become a technologist or computer scientist.